Our research is primarily focused on fixing the programming language disparity in LLMs.

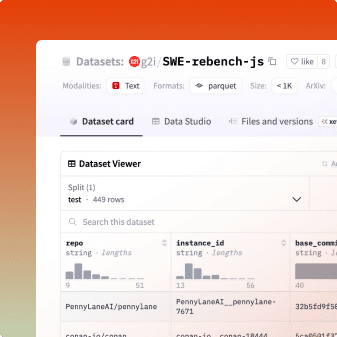

Verifying Multi-SWE-bench TypeScript: Quality Analysis of 210 Tasks

We verified 210 TypeScript benchmark tasks and found that only about 40% provide clear problem statements with reliable tests, leaving roughly 60% with issues such as vague descriptions or flawed tests.

Analyzing AI Agent Performance on TypeScript Tasks: A Deep Dive

We’re launching a research project to analyze how AI coding agents attempt, and fail at, TypeScript tasks in Multi-SWE-bench, the area where agent performance is currently weakest. By studying real solution trajectories, we’ll pinpoint common causes of failure and inefficiency to guide the development of better agents and cleaner training data.